The demise of the server

Most people have a specific association with the word server as it applies to information technology. They conjure up images of large, loud and fast machines that are designed to run 24/7 and handle server workloads. If asked whether servers tend to be faster than desktops and laptops, 99% of people will most likely respond with an enthusiastic YES!

Unfortunately, the past couple of years have seen a dramatic shift in processor performance. I am focussing solely on processor speed, not anything else. The recent trend has been a proliferation of cores per CPU die as opposed to raw frequency bumps. We hit 4GHz two years ago already, though even today Intel does not have a single CPU that can run at a base clock of 4GHz. Rather, we have 8-way systems that run at 2.4GHz with each die containing up to 8 cores, for a total of 64 cores.

Now do not get me wrong - scaling up the number of cores per server is a real way of increasing performance. Certain loads that are highly concurrent will most definitely see a dramatic increase in performance regardless of the clock speed. A simple (and naive) calculation shows that - all things being equal - a server with 64 x 2.4GHz CPU cores will complete a perfectly scalable task 32 times faster than a single core CPU running at 4.8GHz. But this is where things get murky. Most companies are not hosting a stock exchange database or trying to render the next Avatar. Most businesses use servers for things like corporate databases, remote desktop servers, application servers, file servers etc.
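To make that naive calculation concrete, here it is written out in a few lines of Python. This is only a sketch: it assumes throughput is simply cores multiplied by clock speed (ignoring things like instructions per clock, memory bandwidth and turbo), and the function name `aggregate_ghz` is made up for illustration.

```python
def aggregate_ghz(cores: int, clock_ghz: float) -> float:
    """Naive throughput proxy: number of cores multiplied by clock speed."""
    return cores * clock_ghz


# 64 cores at 2.4GHz versus a hypothetical single core at 4.8GHz
server = aggregate_ghz(64, 2.4)
single_core = aggregate_ghz(1, 4.8)

# Only valid for a perfectly scalable task
naive_speedup = server / single_core
print(f"Naive speedup: {naive_speedup:.0f}x")
```

The result only holds if every one of the 64 cores can be kept busy for the entire task.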

Let's focus for a moment on both database servers and remote desktop servers. Most business applications still rely heavily on single core performance. This might be a database query, launching an accounting application, trying to print a CAD drawing etc. Most of these tasks have a critical path that is limited to the performance of one core, so the maximum per core performance is what counts most. Sure, if you have 64 people performing the same task then the 64 core server will be able to handle those tasks concurrently. But I want to focus on the experience of a single person.
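The effect of a single-core critical path can be sketched with Amdahl's law, which is a standard model I am borrowing here rather than anything measured on real hardware. The function names, the arbitrary `work` units and the use of clock speed as a stand-in for per core speed are all assumptions for illustration.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup over one core when only part of a task can use extra cores."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def single_user_time(work: float, parallel_fraction: float,
                     cores: int, clock_ghz: float) -> float:
    """Relative time for ONE task; clock speed stands in for per core speed (an assumption)."""
    return work / (clock_ghz * amdahl_speedup(parallel_fraction, cores))


# Hypothetical task whose critical path is entirely serial (parallel_fraction = 0.0)
server_time = single_user_time(100.0, 0.0, cores=64, clock_ghz=2.4)
fast_core_time = single_user_time(100.0, 0.0, cores=1, clock_ghz=4.8)

print(f"64 x 2.4GHz server: {server_time:.1f} units")
print(f"1 x 4.8GHz core:    {fast_core_time:.1f} units")
```

With a fully serial task the 64 extra cores contribute nothing, and under these assumptions the server takes twice as long as the single fast core. Only as `parallel_fraction` approaches 1.0 does the core count start to pay off for a single user.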

The most scalable processor from Intel right now is the E7-8870, a 10 core 8-way CPU costing $4600. A top end 2-way processor is the E5-2687W (the v2 is not yet available), which runs at 3.1GHz and has 8 cores, for a total of 16 cores across 2 processors. Now let's forget about clock speed.

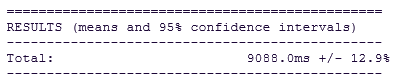

Let's look at a practical server I built for a client. This server cost $7000. It has two E5-2670 2.6GHz CPUs for a total of 16 cores.

Then let's take my MacBook Pro - a $2200 laptop that has a 4 core i7-3820QM processor running at 2.7GHz.

That is right - I am going to compare a 1.5-year-old laptop with a brand new high-end server.

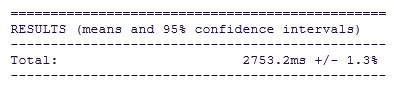

The Kraken benchmark shows the following:

4 core MacBook Pro:

That means for a single user on both machines, for this kind of workload, the process will complete MORE THAN THREE TIMES FASTER on the laptop than on the server.

I feel that the industry is neglecting per core performance in favour of scalability. This seriously affects the user's experience when they work on a remote server. It forces people to seriously reconsider distributed computing instead of thin clients and centralised configurations - something that is an absolute pain to manage. It gets harder to explain to companies why their expensive, shiny new server is slower than their new iPhone 5s. And frankly, I am at a loss explaining that myself.